Work is always measured in some way. If you are doing repetitive work, the tendency is to measure the number of repetitions, like the pounds of fruit picked by a field worker in an hour or a day. If you are doing knowledge work, the tendency is to simply measure the hours of work spent on the problem, assuming that a) the person doing the work has the necessary set of skills and a comprehensive knowledge base of the subject at hand and b) they spent enough time to evaluate the problem sufficiently and provide a balanced solution. If you are doing time and/or payment-dependent contract work, the main consideration is whether you completed the assigned work within the contracted period and at the specified cost.

This all seems relatively benign and normal in the world of work because it assigns a value to work that can then be measured and evaluated in various ways. Did you pick more fruit this week than last? Did you solve more problems in this quarter? Did you complete all your contracted work on time and budget?

Software development is, of course, one of the most valuable types of knowledge work being done globally today. Since development is usually the domain of teams and is, at this time, largely done with some form of agile and/or lean methodology, the measurements tend to be a combination of individual and team metrics applied by various means. Some common metrics are:

- Lines of Code – often expressed in thousands of lines per person-month, with variations for the size of the team and the duration of the project.

- Cost Modeling – the total cost of a project, against the number of individuals on the team(s) required and length of the project. Formulas vary depending on cost per hour spread among individuals (role and experience), method of averaging, productive hours vs. billed hours, total lines of code in finished work, quality measures, efficiency measures and more…

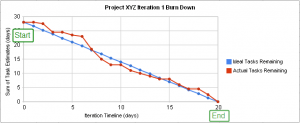

-

Standard Burn-Down Chart

Function Points – measures of the amount of functionality or user stories (and the points assigned to the story) completed by teams within a period of time (usually measured across sprints and the project as a whole) completed and released. Not all measures of function points completed include the successful release to production.
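As a rough illustration of the three metrics listed above, the sketch below computes each one from made-up numbers. The function names, the flat hourly rate, and the sample figures are assumptions chosen for illustration; real cost models and point-counting schemes vary widely, as noted above.

```python
def kloc_per_person_month(lines_of_code: int, people: int, months: float) -> float:
    """Thousands of lines of code produced per person-month."""
    return (lines_of_code / 1000) / (people * months)


def project_cost(people: int, months: float, hours_per_month: float, rate_per_hour: float) -> float:
    """Simplest possible cost model: everyone bills the same flat rate (an assumption)."""
    return people * months * hours_per_month * rate_per_hour


def burn_down(total_points: int, completed_per_sprint: list[int]) -> list[int]:
    """Story points remaining after each sprint, starting from the full backlog."""
    remaining = [total_points]
    for done in completed_per_sprint:
        # Never report a negative backlog, even if the team over-delivers.
        remaining.append(max(remaining[-1] - done, 0))
    return remaining


if __name__ == "__main__":
    # Hypothetical project: 4 people, 3 months, 24,000 lines of code.
    print(kloc_per_person_month(24_000, people=4, months=3))
    print(project_cost(people=4, months=3, hours_per_month=160, rate_per_hour=50.0))
    print(burn_down(100, [20, 25, 15, 30]))
```

Note that none of these numbers says anything about whether the code was useful or well made, which is the concern the rest of this article takes up.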

When we get beyond these three common methods and their minor variations, we start getting into more complex models (!!) that tie together team performance, quality, project length, man-hours, adherence to production and methodology standards, and complex team models in DevOps implementations.

We Have Questions

At the end of the exercise, however it is done, we end up with some sort of measurement of the work of software development. But as we do, we need to ask ourselves a few important questions:

- What does our measurement tell us? What is it not telling us? If we are measuring lines of code or functions, is the measurement telling us whether the work was efficient, or was the output simply meant to produce more (or a given number of) lines/functions over a given period? Were the lines of code or functions really efficient and useful? Programming is very comparable to writing. Some writers are more efficient at expressing thought and others see a more elegant way to express a thought without addressing it directly. Some programmers are more efficient because they reuse code multiple times throughout the source with efficient use of variables. Some functions in a program are critical and getting them right is tough, requiring time and effort. Other functions are simply «eye-wash» and don’t have a real bottom-line value. There are as many ways to game the system as there are ways to produce better code with fewer lines. Every programmer, every team, and every project has different dynamics depending on the language being used, the type of environment, the technical problem being dealt with, and many other factors. If you use a pure «number of X» metric, you have to be clear about what it means and what it doesn’t address.

- Is our measurement comparable? Can it be used over the long term or compared across projects or teams? Frequently, this is where the complex math becomes part of the equation and, frankly, begins to fail to do its job effectively. The more we try to «normalize» measurements across a single project, between teams, and across projects and teams, the more subjective the outcome becomes. Were the technical hurdles encountered at the beginning of Project A really comparable to Project B? If we decide there is a significant difference, how do we adjust for it? Did Team A experience more changes in team composition than Team B? How do we account for that issue? Are we comparing skill levels, experience, time to reach «normal productivity» or some other factor? Was Team A really suited to provide the skills and experience needed for the given project? Did their productivity fail to reach the levels expected because of issues outside of their control? In most cases, the more you try to normalize measures of productivity across individuals, teams, and projects, the less sure you can be that you have a reasonable common measure. And in the end, the question becomes, is a comparable metric what we really need?

- Isn’t any measurement better than no measurement? Can we afford to fly blind? Certainly, if you have an outsourced team, multiple teams, and/or multiple projects you have to begin to understand why some projects seem to work well and some don’t. You need to be able to judge if a project is going off the tracks so you can get it back in line before the problem becomes critical. Finding ways to measure performance and productivity would seem to be the best tool to address the common issues in software development projects. But if the measurements we are using aren’t really addressing the problems we have, how are they helping us? Are they really more than just busy work for managers and bean counters?

The more we look at the problem, the more we begin to understand that measurement for the sake of monitoring «something» isn’t really useful. But on the other hand, measuring any aspect of software development is better than monitoring nothing at all and simply hoping everything will work out in the end. Even a weak measurement provides some level of confidence if it is used consistently and we understand its shortcomings well enough.

In agile teams, there is another, more important way to look at things. If we give the team simple methods they agree on and understand, like story points and burn-down charts, they will know when they are performing well and when they are pushing too large a boulder up a hill. Inside the team, they know who is consistently performing and producing efficient code with fewer problems. The team knows when its backlog is too great to finish in the allotted time. Team members learn quickly who can be asked for pointers on how to approach a problem properly and who cannot be depended on. We can stand around pointing out issues with the common ways to measure agile team performance, but the value of those common agile methods is that they help the team understand where it stands in relation to the work ahead and to its own quality performance. Where we get into trouble is when we extract those common measures and try to draw larger conclusions from them.
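To make the "backlog too great to finish in the allotted time" check concrete, here is a minimal sketch of the kind of back-of-the-envelope forecast a team might run for itself. It assumes velocity is simply the average of story points completed in recent sprints; the function names and sample numbers are hypothetical, and the result is only as trustworthy as the team's own estimates.

```python
import math


def sprints_needed(backlog_points: int, recent_velocities: list[int]) -> int:
    """Sprints required to clear the backlog at the average recent velocity."""
    if not recent_velocities or sum(recent_velocities) <= 0:
        raise ValueError("need at least one sprint with completed points")
    average_velocity = sum(recent_velocities) / len(recent_velocities)
    # Round up: a partly used sprint still has to be scheduled.
    return math.ceil(backlog_points / average_velocity)


def backlog_fits(backlog_points: int, recent_velocities: list[int], sprints_left: int) -> bool:
    """True if the remaining backlog can plausibly be finished in the sprints left."""
    return sprints_needed(backlog_points, recent_velocities) <= sprints_left


if __name__ == "__main__":
    # Hypothetical team: 120 points remaining, last three sprints delivered 20, 25, and 15.
    print(sprints_needed(120, [20, 25, 15]))
    print(backlog_fits(120, [20, 25, 15], sprints_left=4))
```

A check like this is meant for the team's own use. Used that way, a "does not fit" answer is a prompt for a conversation about scope, not a score to report upward.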

The Reality is – Communication is the Key

If we get past trying to use performance metrics outside of a single project and narrow our focus to what they do for a team inside of a project, we begin to see their value more clearly. The team knows what is going on. They may attempt to fool themselves or others, but in the end, they can see the light in the tunnel and they know if it is a successful end to the project or an approaching train. The problem is to get them to trust each other and the larger team enough to surface the problems they see as soon as they see them, without fear.

The level of trust and responsibility required for open communication and collaboration is perhaps the largest problem faced in software development projects. It is the «why» behind the invention and popularity of the agile and lean methodologies in wide use today. Conversely, the larger the organization and the more critical and costly the project is to it, the less likely it is that the team will feel, and actually be, enabled to ask questions and speak out about the problems they believe they are facing. In a large organization, metrics are the shorthand to avoid having «high touch» with every team and individual. In a costly, critical project, metrics are meant to be the «truth-tellers» to assure stakeholders that things are well controlled, even though everyone knows that metrics can be gamed without much trouble. And the more the metrics are relied on, instead of the knowledge inside of the team, the more likely it is that the project will get out of hand before the problems are addressed.

Responsibility. Trust. Communication.

So what is the bottom line on measuring performance and productivity in software development projects?

- Measurement is most useful inside the software development team itself, where the knowledge resides about what is really going on from day to day. Everywhere else, it is just as likely to be smoke and mirrors as it is to be honest and useful, depending on who is using the metrics and for what purpose. If the team doesn’t understand the metrics applied and can’t use them to better understand where they are in relation to the work to be done, their quality performance, and their general efficiency as a team, the metrics will fail to give back their primary value – keeping the team and project on track.

- The agile methodology relies on individual and team responsibility, trust and communication. If these three points cannot be achieved within a team, agile and scrum are just a bunch of processes and procedures that may or may not be helpful in organizing work. If the team is just «going through the motions» in taking responsibility for tasks, assessing and managing their backlog, and participating in standups and retrospectives, additional metrics and measurement will not provide much value. They will provide information to a larger audience too late to enable outside forces to do more than triage injuries to the project.

- Working through what may appear to be problems or what may seem to be a simple solution requires peer-level coaching and communication, not blame. If we understand that the team itself really knows what is going on, then getting them to assess the problem honestly and figure out how to address it requires something other than pointing fingers, avoiding issues, and top-down communication. It also means that the team needs to take responsibility, as soon as anyone recognizes a problem could be on the horizon, to consider it seriously and determine both the size of the problem and the solutions that could be used to address it. And so what if the team raises an issue that turns out to be nothing of great importance? We’ve been called to action to look at it and we will all come out of the conversation wiser. If everyone in the conversation addresses each other as a peer, rather than hierarchically, there is good reason to think that the next time a problem is encountered or perceived, it will be openly addressed, rather than swept under the rug.

Three points to consider and use in conjunction with standard agile and scrum-based tools. You can use more and you can draw all sorts of conclusions from the systems you bring together. But in the end, if they don’t bring immediate value back to a current project, what value are they really serving?

Looking for a development team? Contact us. We can help you!

Scio provides nearshore software development services for our client base in North America. We work closely with our clients to ensure that the projects we are involved in have the level of communication and understanding needed to reach successful outcomes. If you have a project where you think we could help, please contact us. We would be happy to discuss your needs.